This tutorial uses lots of imports, mostly for loading the dataset(s).

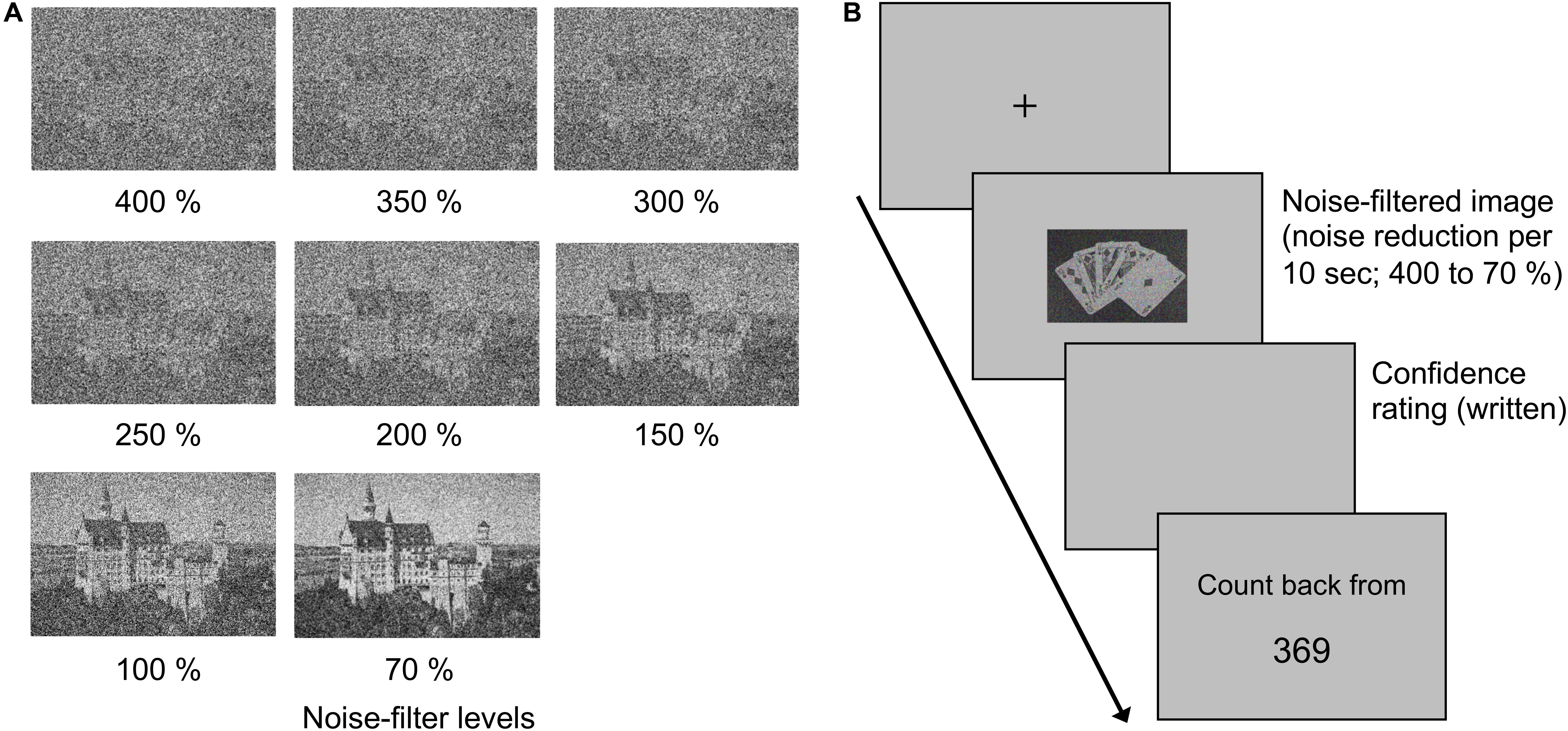

# Visual task psychopy example code install

Given an image, your goal is to generate a caption such as "a surfer riding on a wave".

The model architecture used here is inspired by Show, Attend and Tell: Neural Image Caption Generation with Visual Attention, but has been updated to use a 2-layer Transformer-decoder. To get the most out of this tutorial you should have some experience with text generation, seq2seq models & attention, or transformers.

In the model built in this tutorial, features are extracted from the image and passed to the cross-attention layers of the Transformer-decoder. The Transformer-decoder is mainly built from attention layers. It uses self-attention to process the sequence being generated, and it uses cross-attention to attend to the image. By inspecting the attention weights of the cross-attention layers you will see what parts of the image the model is looking at as it generates words.

When you run the notebook, it downloads a dataset, extracts and caches the image features, and trains a decoder model. It then uses the model to generate captions on new images.

Setup: run `apt install --allow-change-held-packages libcudnn8=8.1.0.77-1+cuda11.2`, then `pip uninstall -y tensorflow estimator keras`, `pip install -U tensorflow_text tensorflow tensorflow_datasets`, and `pip install einops`. The `apt` step needs root privileges. Without them it fails with `E: Could not open lock file /var/lib/dpkg/lock-frontend - open (13: Permission denied)` and `E: Unable to acquire the dpkg frontend lock (/var/lib/dpkg/lock-frontend), are you root?`.
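To make the cross-attention step from the overview concrete, here is a minimal sketch in plain Python of scaled dot-product attention from caption tokens to image regions. It is an illustration under stated assumptions, not the notebook's code: the function names `softmax` and `cross_attention` and the toy vectors are made up for this example, and the learned query/key/value projections and multiple heads that a real Transformer layer would have are left out.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, image_features):
    """Scaled dot-product attention from token queries to image regions.

    queries:        one vector per caption token generated so far
    image_features: one vector per image region (used as keys and values)
    Returns (outputs, weights); weights[t][r] is how strongly token t
    attends to region r.
    """
    depth = len(image_features[0])
    scale = math.sqrt(depth)
    outputs, weights = [], []
    for q in queries:
        # Similarity of this token's query to every image region.
        scores = [sum(a * b for a, b in zip(q, k)) / scale
                  for k in image_features]
        w = softmax(scores)
        # Output is the attention-weighted sum of the region vectors.
        out = [sum(w_r * v[d] for w_r, v in zip(w, image_features))
               for d in range(depth)]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Toy data (hypothetical): 3 image regions, 2 token queries, 2-d vectors.
regions = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
tokens = [[4.0, 0.0], [0.0, 4.0]]
outputs, weights = cross_attention(tokens, regions)
for t, row in enumerate(weights):
    print(t, [round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and can be read as a distribution over image regions for one generated token, which is the quantity you would plot to see where the model is "looking".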
